
Why I moved from Google Colab and Amazon SageMaker to Saturn Cloud

When it comes to scientific experimentation and collaboration, people tend to need the same thing: an easy-to-use interface to hack on and optimize their algorithms, a data input-output system, and support for their preferred programming language. A natural solution to these issues emerged in 2011 with the release of the IPython Notebook (the precursor of Jupyter), an extremely versatile web application that lets you create a notebook file that serves as an interactive code interface, a data visualization tool, and a Markdown editor.

Jupyter Cloud Solutions

There are many ways to share a static Jupyter notebook with others, such as posting it on GitHub or sharing an nbviewer link. However, the recipient can only interact with the notebook file if they already have the Jupyter Notebook environment installed. But what if you want to share a fully interactive Jupyter notebook that doesn't require any installation? Or what if you want to create your own Jupyter notebooks without installing anything on your local machine?

The realization that Jupyter notebooks have become the standard gateway to machine learning modeling and analytics among data scientists has fueled a surge in software products marketed as "Jupyter notebooks in the cloud (plus new stuff!)". Just from memory, here are a few company offerings and startup products that fit this description in whole or in part: Kaggle Kernels, Google Colab, AWS SageMaker, Google Cloud Datalab, Domino Data Lab, Databricks Notebooks, Azure Notebooks… the list goes on and on. Based on my conversations with fellow data science novices, the two most popular Jupyter cloud platforms seem to be Google Colab and Amazon SageMaker.

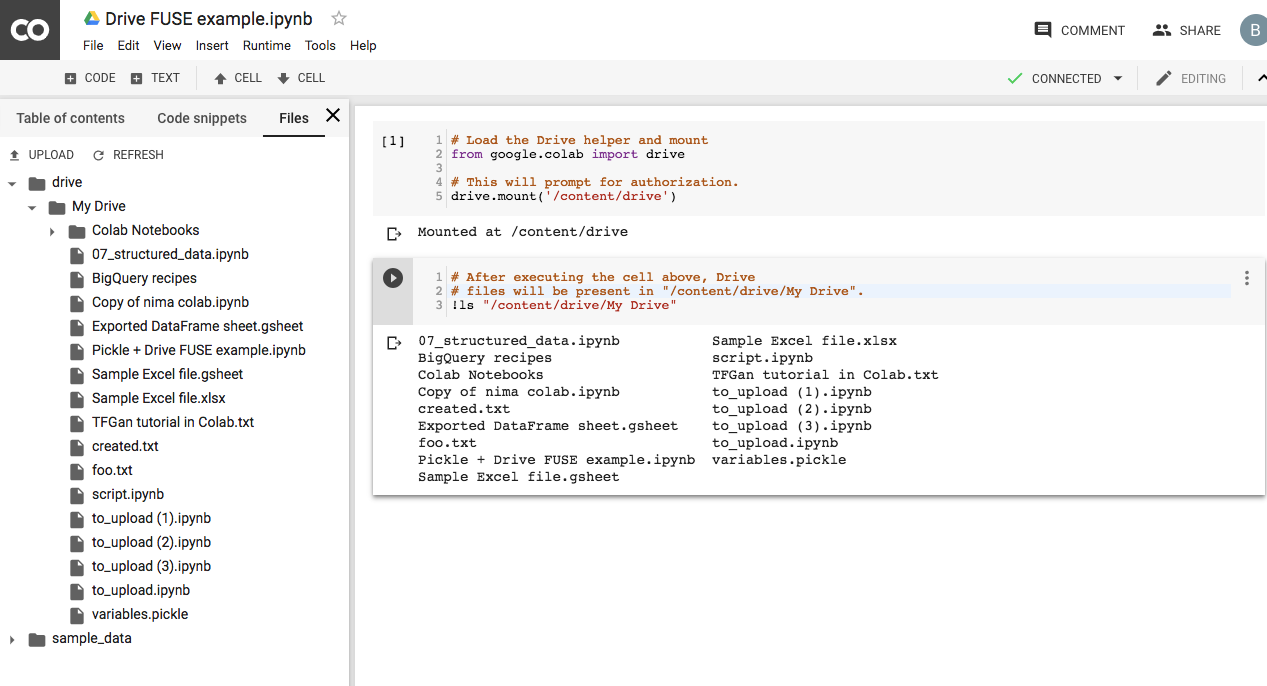

Google Colab

Google Colab is ideal for everything from improving your Python coding skills to working with deep learning libraries like PyTorch, Keras, TensorFlow, and OpenCV. You can create notebooks in Colab, upload notebooks, store notebooks, share notebooks, mount your Google Drive and use whatever you've got stored in there, import most of your favorite directories, upload your personal Jupyter Notebooks, upload notebooks directly from GitHub, upload Kaggle files, download your notebooks, and do just about everything else you might want to be able to do.

Visually, the Colab interface looks quite similar to the Jupyter interface. However, working in Colab actually feels very different from working in the Jupyter Notebook:

- Most of the menu items are different.

- Colab has changed some of the standard terminology ("runtime" instead of "kernel", "text cell" instead of "markdown cell", etc.).

- Colab has invented new concepts that you have to understand, such as "playground mode."

- Command mode and Edit mode in Colab work differently than they do in Jupyter.

A lot has been written about Google Colab troubleshooting, so without going down that rabbit hole, here are a few things that are less than ideal. Because the Colab menu bar is missing some items and the toolbar is kept very simple, some actions can only be done using keyboard shortcuts. You can't download your notebook in other useful formats such as an HTML webpage or a Markdown file (though you can download it as a Python script). You can upload a dataset to use within a Colab notebook, but it will automatically be deleted once you end your session.
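Colab does let you download the raw .ipynb file, so one workaround for the missing export formats is to convert it locally; the `jupyter nbconvert --to markdown` (or `--to html`) command handles this properly. To show what such a conversion involves, here is a minimal stdlib-only sketch, assuming the nbformat v4 JSON layout (a top-level `cells` list whose entries carry `cell_type` and `source`). The helper name `notebook_to_markdown` is hypothetical, and the sketch deliberately ignores cell outputs, attachments, and raw cells.

```python
import json
from typing import List, Union

FENCE = "`" * 3  # built programmatically so this snippet stays easy to embed


def _cell_text(source: Union[str, List[str]]) -> str:
    # In nbformat v4, a cell's "source" may be one string or a list of lines.
    return source if isinstance(source, str) else "".join(source)


def notebook_to_markdown(nb_json: str, language: str = "python") -> str:
    """Hypothetical helper: render an .ipynb (nbformat v4 JSON) as Markdown.

    Markdown cells are copied verbatim, code cells are wrapped in fenced
    blocks, and everything else (raw cells, outputs) is skipped.
    """
    nb = json.loads(nb_json)
    parts = []
    for cell in nb.get("cells", []):
        text = _cell_text(cell.get("source", "")).rstrip("\n")
        if cell.get("cell_type") == "markdown":
            parts.append(text)
        elif cell.get("cell_type") == "code":
            parts.append(f"{FENCE}{language}\n{text}\n{FENCE}")
    return "\n\n".join(parts) + "\n"


# Usage: a tiny two-cell notebook.
example = {
    "nbformat": 4,
    "cells": [
        {"cell_type": "markdown", "source": ["# Title\n", "Intro"]},
        {"cell_type": "code", "source": "print(1)", "outputs": []},
    ],
}
print(notebook_to_markdown(json.dumps(example)))
```

For anything beyond a quick look, nbconvert is the better choice, since it also renders outputs and images.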

In terms of the ability to share publicly, if you choose to make your notebook public and share the link, anyone can access it without creating a Google account, and anyone with a Google account can copy it to their own account. Additionally, you can authorize Colab to save a copy of your notebook to GitHub or Gist and then share it from there.

In terms of the ability to collaborate, you can keep your notebook private but invite specific people to view or edit it (using Google's familiar sharing interface). You and your collaborator(s) can edit the notebook and see each other's changes, as well as add comments for each other (similar to Google Docs). However, your edits are not visible to your collaborators in real time (there's a delay of up to 30 seconds), and your edits can get lost if multiple people are editing the notebook at the same time. Also, you are not actually sharing your environment with your collaborators (meaning there is no syncing of what code has been run), which significantly limits the usefulness of the collaboration functionality.

Colab does give you access to a GPU or a TPU. Otherwise, Google does not provide any specifications for its environments. If you connect Colab to Google Drive, you get up to 15 GB of disk space for storing your datasets. Sessions shut down after 60 minutes of inactivity, though they can run for up to 12 hours.
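Because uploaded files disappear when the session ends, a common pattern is to keep datasets on Google Drive instead. The sketch below uses `google.colab.drive.mount`, which is only importable inside Colab, and falls back to a local folder everywhere else so the same notebook also runs on your own machine. The helper name `data_dir`, the `/content/drive/MyDrive` path, and the `./data` fallback are assumptions about a typical setup, not anything Colab prescribes.

```python
from pathlib import Path


def data_dir(local_fallback: str = "./data") -> Path:
    """Hypothetical helper: pick a dataset folder that outlives the session.

    Inside Colab, mount Google Drive, whose files persist after the session
    ends. Anywhere else, fall back to a local folder. Both paths are
    assumptions about your setup.
    """
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        path = Path(local_fallback)
    else:
        drive.mount("/content/drive")  # asks you to authorize Drive access
        path = Path("/content/drive/MyDrive")  # assumed default mount layout
    path.mkdir(parents=True, exist_ok=True)
    return path


# Usage: read and write datasets relative to this folder in either environment.
# csv_path = data_dir() / "train.csv"
```

Keeping the environment check in one helper means the rest of the notebook never needs to know whether it is running in Colab.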

The greatest strength of Colab is that it's easy to get started, since most people already have a Google account, and it's easy to share notebooks, since the sharing functionality works the same as in Google Docs. However, the cumbersome keyboard shortcuts and the difficulty of working with datasets are significant drawbacks. The ability to collaborate on the same notebook is useful, but less useful than it could be, since you're not sharing an environment and you can't collaborate in real time.

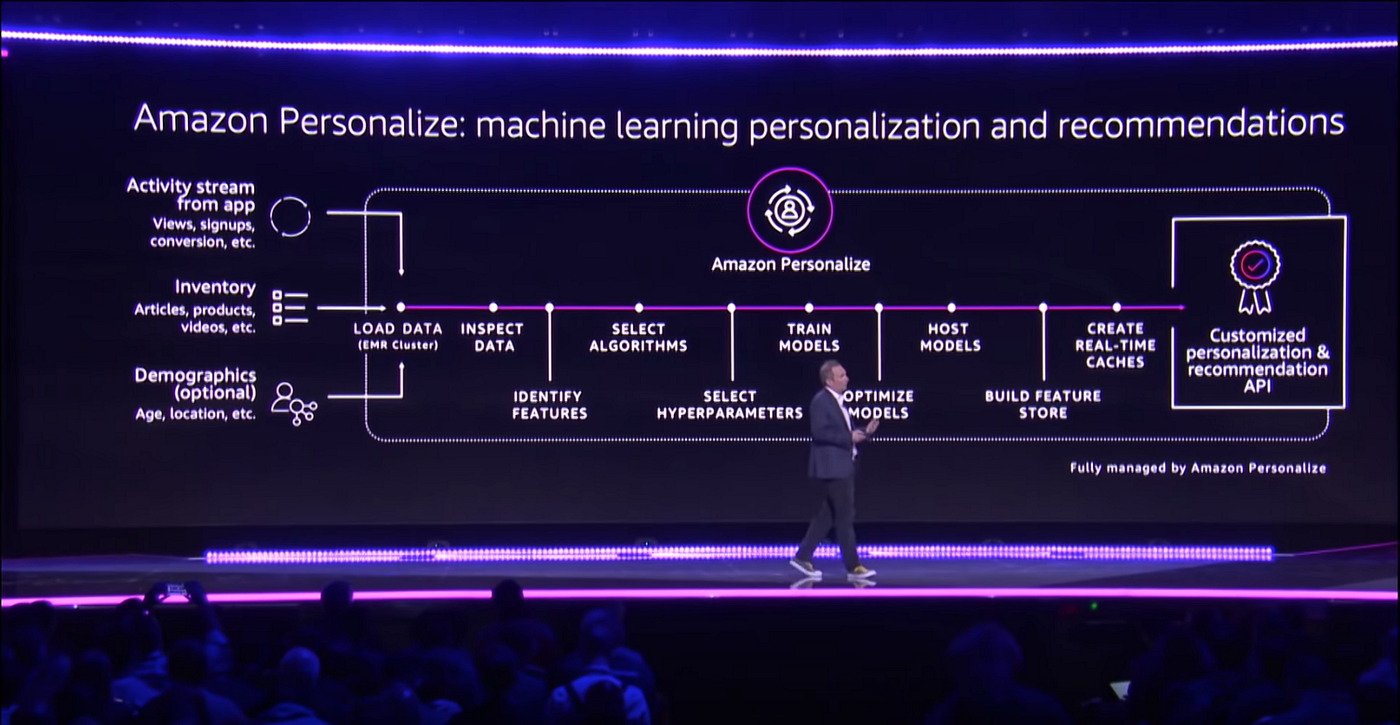

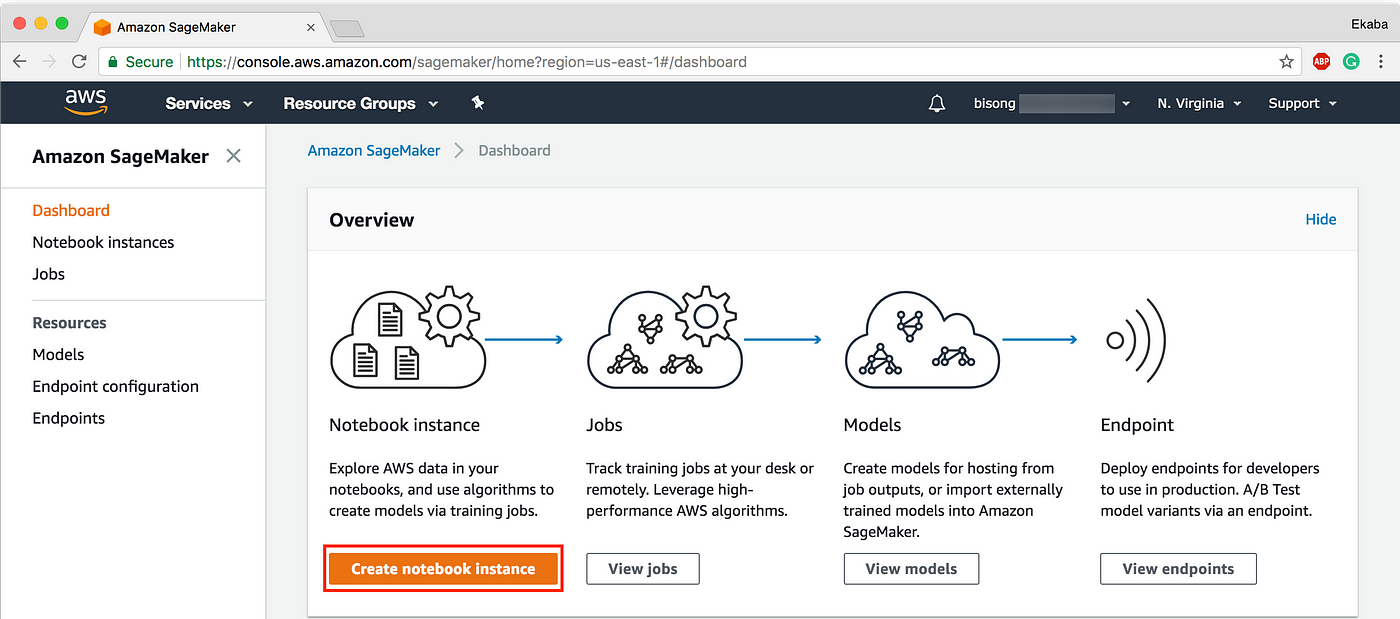

Amazon SageMaker

Amazon SageMaker is a fully managed machine learning service that helps data scientists and developers quickly and easily build and train models, and then deploy them directly into a production-ready hosted environment. It provides an integrated Jupyter authoring notebook instance for easy access to your datasets for exploration and analysis, so you don't have to manage servers. It also provides common ML algorithms that are optimized to run efficiently against extremely large data in a distributed environment. With native support for bring-your-own algorithms and frameworks, Amazon SageMaker offers flexible distributed training options that adjust to your specific workflows.

First, you spin up a so-called "notebook instance", which hosts the Jupyter Notebook application itself, all the notebooks, auxiliary scripts, and other files. There is no need to connect to that instance (in fact you cannot, even if you wanted to) or set it up in any way. Everything is already prepared for you to create a new notebook and use it to collect and prepare some data, define a model, and start the learning process. All the configuration, provisioning of compute instances, moving of data, etc. is triggered literally with a single function call. This nifty process dictates a certain approach to defining models and organizing data.

SageMaker is built on top of other AWS services. Notebook, training, and deployment machines are just ordinary EC2 instances running specific Amazon Machine Images (AMIs). Data (as well as results, checkpoints, logs, etc.) is stored in S3 object storage. This might be troubling if you are working with images, videos, or any huge datasets, because you have to upload all your data to S3. When you configure training, you tell SageMaker where to find your data, and SageMaker then automatically downloads it from S3 to every training instance before training starts. Every time. For reference, it takes around 20 minutes to download 100 GB worth of images, which means that you have to wait at least 25 minutes before training begins. Good luck debugging your model! On the other hand, when all preliminary trials are done elsewhere and your model is already polished, the training experience is very smooth: just upload your data to S3 and get interim results from there too.
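To make the cost of re-downloading concrete: 100 GB in about 20 minutes works out to roughly 100,000 MB / 1,200 s ≈ 83 MB/s. The sketch below scales that single reference point linearly to other dataset sizes and counts the download once per training job. Constant throughput, decimal units (1 GB = 1000 MB), and one download per job are simplifying assumptions; `download_minutes` and `repeated_download_hours` are hypothetical helpers.

```python
# Reference point from the text: roughly 100 GB of images in about 20 minutes.
REF_SIZE_GB = 100.0
REF_MINUTES = 20.0


def download_minutes(size_gb: float) -> float:
    """Estimate S3-to-instance download time, assuming constant throughput."""
    return size_gb * REF_MINUTES / REF_SIZE_GB


def repeated_download_hours(size_gb: float, training_jobs: int) -> float:
    """Total download time across jobs, since the data is re-fetched each time."""
    return training_jobs * download_minutes(size_gb) / 60.0


# Effective throughput implied by the reference point (decimal units assumed).
throughput_mb_s = REF_SIZE_GB * 1000 / (REF_MINUTES * 60)

print(f"{throughput_mb_s:.0f} MB/s")                  # 83 MB/s
print(download_minutes(250))                           # 50.0
print(repeated_download_hours(100, training_jobs=15))  # 5.0
```

Under these assumptions, fifteen debugging runs on the same 100 GB dataset spend about five hours just copying data, which is why the text recommends doing preliminary trials elsewhere.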

Another aspect to consider is pricing. Notebook instances can be very cheap, especially when there is no need to pre-process the data. Training instances, on the other hand, may easily burn a hole in your pocket. Check the SageMaker pricing page for all the prices, as well as the list of regions where SageMaker has already launched.

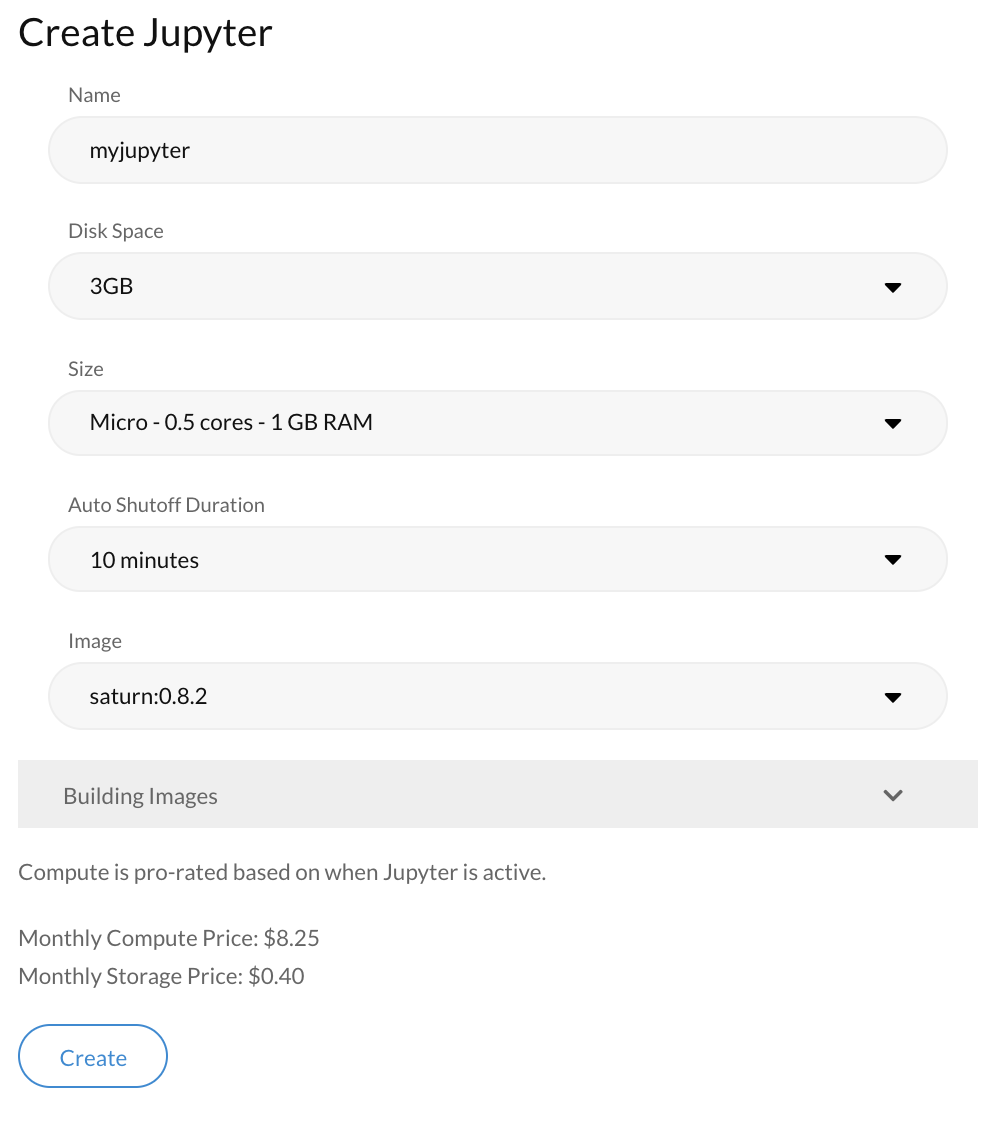

Introducing Saturn Cloud

Saturn Cloud is a new kid on the block that makes life easy for data scientists who are not interested in setting up infrastructure and care more about doing data science. More specifically, the platform helps manage Python environments in the cloud.

You can get started with the free tier after signing up for an account. In the dashboard, you can create a Jupyter Notebook for a project by choosing the disk space and the size of your machine. The available configurations cover the requirements of a lot of practical data science projects. Furthermore, you can define an auto-shutoff duration for your project, which controls how long it can stay idle before it shuts down because of inactivity.

It's extremely easy to share notebooks via Saturn Cloud. I did a previous project that explores the Instacart Market Basket Analysis challenge, and you can view the public notebook here: https://www.saturncloud.io/yourpub/khanhnamle1994/instacart-notebooks/notebooks/Clan-Rule-Mining.ipynb. I especially enjoyed how the code blocks and the visualizations get rendered without any of the clutter we see in Google Colab notebooks. It looks just like a report, as it is intended to. I also like the "Run in Saturn" option, which lets users simply click over and run the code themselves without any need for an explicit login.

Overall, Saturn Cloud makes it easy to share your notebooks with teammates without the hassle of making sure they have all the right libraries installed. This sharing capability is superior to Google Colab's.

Furthermore, for those of you who have run notebooks locally and run out of memory, it also lets you spin up virtual machines with the memory you need and pay only for what you use. This pay-for-what-you-use pricing is a tremendous advantage compared to Amazon SageMaker.

There are also a few other bells and whistles that really mitigate some of the complexity of work typically handled from a DevOps perspective. That's what makes tools like this great. In many ways, until you work in a corporate environment where even sharing a basic Excel document can become cumbersome, it is hard to explain to younger data scientists why this matters so much.

Conclusion

Saturn Cloud is still far from being production-ready, but it's a very promising solution. I personally think it is the only solution so far that comes close to the ease of use of a local Jupyter server while adding the benefits of cloud hosting (version control, scaling, sharing, etc.).

The proof of concept is indeed there, and it just lacks some polish around the edges (more language support, better version control, and an easier point-and-click interface). I am excited to see future versions of the Saturn platform!

References

- Six easy ways to run your Jupyter Notebook in the cloud. Data School. Kevin Markham, March 2019.

Source: https://towardsdatascience.com/why-i-moved-from-google-colab-and-amazon-sagemaker-to-saturn-cloud-675f0a51ece1
